Event Camera-Based Real-Time Gesture Recognition for Improved Robotic Guidance

Muhammad Aitsam, Sergio Davies and Alessandro Di Nuovo

The full paper can be found here (https://ieeexplore.ieee.org/document/10650870)

Abstract

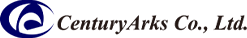

Recent breakthroughs in event-based vision, driven by the capabilities of high-resolution event cameras, have significantly improved human-robot interactions. Event cameras excel in managing dynamic range and motion blur, seamlessly adapting to various environmental conditions. The research presented in this paper leverages this technology to develop an intuitive robot guidance system capable of interpreting hand gestures for precise robot control. We introduce the "EB-HandGesture" dataset, an innovative high-resolution hand-gesture dataset used in conjunction with our network "ConvRNN" to demonstrate commendable accuracy of 95.7% in the interpretation task, covering six gesture types in different lighting scenarios. To validate our framework, real-life experiments were conducted with the ARI robot, confirming the effectiveness of the trained network during the various interaction processes. This research represents a substantial leap forward in ensuring safer, more reliable and more efficient human-robot collaboration in shared workspaces.

Ph.D. Muhammad Aitsam, Sheffield Hallam University and Smart Interactive Technologies Research Lab

LinkedIn (https://www.linkedin.com/in/muhammad-aitsam-653894105/)

Google Scholar (https://scholar.google.co.uk/citations?user=8zEI5F8AAAAJ&hl)