"CenturyArks Award", SilkyEvCam® Event-based Vision Camera Application Idea and Algorithm Contest 2024

"Object-based Visual SLAM with Stereo Event Camera" Bao Runqiu, Graduate School of Engineering, The University of Tokyo

Digest

(1) Outline of the ideas

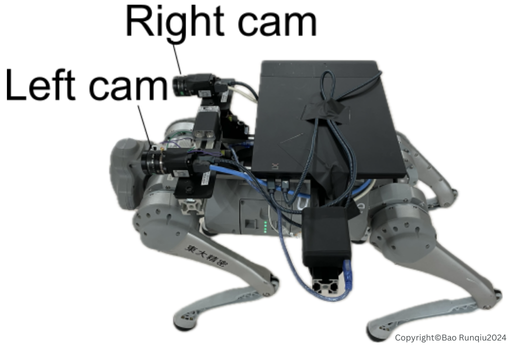

This proposal aims to develop a four-legged robot (Figure 1) that can operate under widely varying light conditions and in situations that require rapid movement, by exploiting the wide dynamic range and high temporal resolution of an event-based vision camera (hereinafter referred to as “event camera”). The robot is equipped with an Arduino single-board microcontroller. Commands from the microcontroller drive the left and right cams (rotating disks that convert rotation into linear motion), which makes it possible to control the robot's movement, including its speed and direction.
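As a rough illustration of how a speed command and a direction command could be turned into separate rates for the left and right cams, here is a minimal sketch. The function name `mix_commands`, the normalized command range, and the mixing rule itself are assumptions made for illustration; the digest does not describe the robot's actual control law.

```python
def mix_commands(speed, turn, max_rate=1.0):
    """Map a forward-speed command and a turn command to (left, right) cam rates.

    Hypothetical mixing rule: a positive `turn` speeds up the left cam and
    slows the right cam, so the robot veers to the right.
    """
    left = speed + turn
    right = speed - turn
    # If either side exceeds the limit, scale both down together so the
    # left/right ratio (and therefore the turning behaviour) is preserved.
    peak = max(abs(left), abs(right), max_rate)
    scale = max_rate / peak
    return left * scale, right * scale


if __name__ == "__main__":
    print(mix_commands(0.5, 0.0))  # straight ahead at half rate
    print(mix_commands(1.0, 1.0))  # full speed with a hard right turn
```

Scaling both sides by the same factor, rather than clipping each side independently, keeps the requested direction intact when the command saturates.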

The proposed system feeds data captured by the event camera into SLAM (Simultaneous Localization and Mapping), a technique that simultaneously estimates the robot's surrounding environment (obstacles, walls, etc.) and the robot's own position within that environment. Using this system, we are conducting demonstration tests of robots that meet the above requirements.

(2) Demonstration experiment using SLAM with an event camera

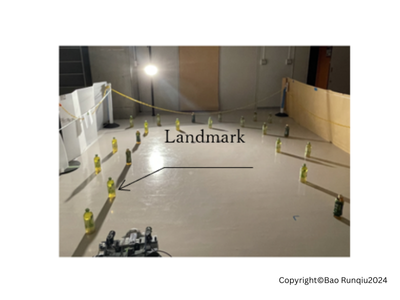

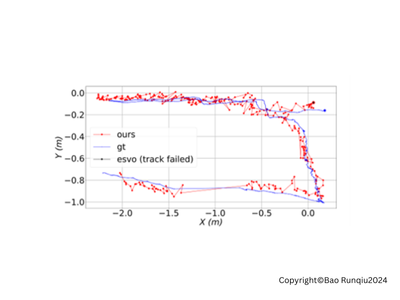

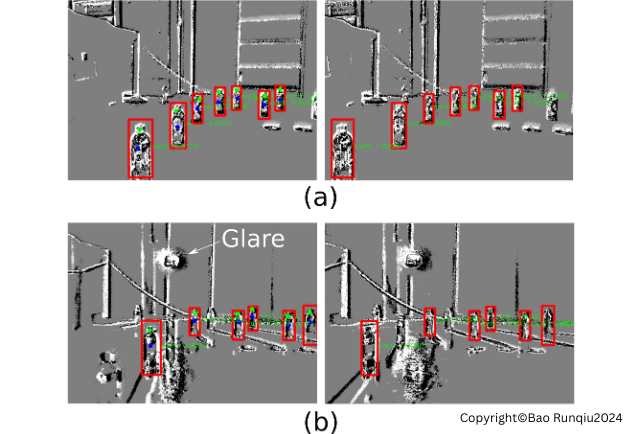

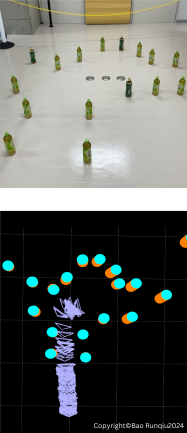

Figure 3 compares the predetermined target path (gt, ground truth) with the path actually followed by the robot (ours), which was controlled using SLAM with an embedded event camera. The experiment was run in an environment with a large difference between minimum and maximum illumination, as shown in Figure 2.
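A comparison like this is often summarized with a single number such as the root-mean-square position error between the two paths. The sketch below shows one way that could be computed. It assumes both paths are 2D, already expressed in the same coordinate frame, and sampled at matching time steps; the function name `trajectory_rmse` is hypothetical, and the digest does not state which metric, if any, was used in the experiment.

```python
import math


def trajectory_rmse(gt, est):
    """Root-mean-square position error between two time-aligned 2D paths.

    `gt` and `est` are equal-length sequences of (x, y) points. Alignment
    in time and coordinate frame is assumed to have been done beforehand.
    """
    if not gt or len(gt) != len(est):
        raise ValueError("paths must be non-empty and of equal length")
    squared_errors = [
        (gx - ex) ** 2 + (gy - ey) ** 2
        for (gx, gy), (ex, ey) in zip(gt, est)
    ]
    return math.sqrt(sum(squared_errors) / len(squared_errors))


if __name__ == "__main__":
    ground_truth = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]
    followed = [(0.0, 0.0), (1.0, 0.3), (2.0, 0.4)]
    print(f"RMSE: {trajectory_rmse(ground_truth, followed):.3f}")
```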

Although this is only a verification experiment for demonstration purposes, we selected this work for the “CenturyArks Award” because demand for SLAM is high and we expect the work to be developed into a practical application.

(3) Comments from the award winner

I am very grateful to receive this award. I am also very grateful for the free loan of one event camera for my experiment. I believe that the event camera is an indispensable vision sensor for robots. We are researching its use in extreme scenarios such as HDR lighting + fast motion without delay and without tuning. Please keep us updated with your latest event sensors.